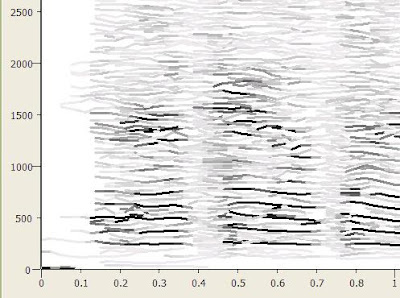

So I've had my Korg DS-10 for almost a month now, and I've gathered my general thoughts below.

The Synths

There are only two of them, which is somewhat restrictive. Supposedly, though, you can daisy-chain several of these mini Korgs together into a tiny retro orchestra, so you can work around the limit if that's really what's holding you back.

I love the patcher that comes with it, even if it is small (the random value generator pretty much sold me), and the synths themselves come with a whole host of ADSR envelope goodness.

Possibly the best thing about the synths is their range of input modes. Besides the keyboard and the sequencer (which, by the way, is quite robust, letting you set the gate, volume, and panning of each note), the "Kaoss" touch pad rocks my world. If you're going to use the DS as a musical instrument, the Kaoss pad is the way to go. You can assign the X and Y axes to parameters such as pitch, volume, or panning. Touching any point on the pad then plays the synth with the parameter values for that (x, y) position.
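To make the Kaoss pad idea concrete, here is a minimal Python sketch of how a single (x, y) touch could be turned into synth parameters. It assumes X is mapped to pitch over a two-octave MIDI note range and Y to volume; the function name `kaoss_params` and those ranges are hypothetical choices for illustration, not how the DS-10 actually works internally. The only hard number is the DS touch screen's 256×192 pixel resolution.

```python
# Hypothetical X/Y pad mapping: the parameter choices and ranges below are
# illustrative assumptions, not the DS-10's real implementation.
SCREEN_W, SCREEN_H = 256, 192  # Nintendo DS touch-screen resolution in pixels


def lerp(lo, hi, t):
    """Linearly interpolate between lo and hi for t in [0, 1]."""
    return lo + (hi - lo) * t


def kaoss_params(x, y, x_range=(48, 72), y_range=(0.0, 1.0)):
    """Map a touch at pixel (x, y) to (midi_note, volume).

    X controls pitch (assumed two-octave MIDI range 48-72);
    Y controls volume, with the top of the screen being loudest.
    """
    tx = x / (SCREEN_W - 1)
    ty = 1.0 - y / (SCREEN_H - 1)  # screen y grows downward, so flip it
    note = round(lerp(*x_range, tx))  # snap pitch to the nearest semitone
    volume = lerp(*y_range, ty)
    return note, volume


print(kaoss_params(0, 191))   # bottom-left corner: lowest note, silent
print(kaoss_params(255, 0))   # top-right corner: highest note, full volume
```

Snapping the note to a whole semitone is just one design choice; leaving it continuous would give a theremin-style glide instead.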

The drum synths get special treatment, with four voices dedicated to the overall drum track.

The Patterns

The patterns are only 16 steps long, so if you're in 4/4 and want to be able to play 16th notes, each pattern is only one measure long. And since each song holds only 16 patterns, you get just 16 unique measures of music before you have to repeat something.

The demos on the DS-10 site are particularly bad in this respect, as they just show someone choosing patterns in sequential order (which could just as easily have been programmed into the Song Sequencer).

I give XSeed a lot of props for letting you change the length of a pattern (up to the 16-step maximum), which means time signatures other than 4/4, such as 3/4 or 7/8, are possible.
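Since each step is a 16th note, it is easy to work out how many steps one measure needs, and therefore which time signatures fit in a single 16-step pattern. This is a small sketch of that arithmetic; the helper name `steps_per_measure` is hypothetical, and the 16th-note grid is an assumption carried over from the 4/4 example earlier in this review.

```python
from fractions import Fraction

PATTERN_MAX_STEPS = 16  # one pattern holds at most 16 steps


def steps_per_measure(numerator, denominator, grid=16):
    """Number of grid steps in one measure of numerator/denominator time.

    grid=16 means each step is a 16th note.
    """
    steps = Fraction(numerator * grid, denominator)
    if steps.denominator != 1:
        raise ValueError("measure does not divide evenly into the grid")
    return int(steps)


for num, den in [(4, 4), (3, 4), (7, 8), (5, 4)]:
    n = steps_per_measure(num, den)
    print(f"{num}/{den}: {n} steps, fits in one pattern: {n <= PATTERN_MAX_STEPS}")
# 4/4 -> 16, 3/4 -> 12, 7/8 -> 14 all fit; 5/4 -> 20 does not
```

So 3/4 and 7/8 work by shortening the pattern, while a signature like 5/4 at 16th-note resolution would need more steps than a single pattern provides.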

The Song Sequencer

The song sequencer is also unbearably short, topping out at 100 consecutive patterns. I composed a basic song as a test: it uses all 100 patterns and, at 130 bpm, runs less than three minutes. Clearly the Korg DS-10 is not meant for composing elaborate symphonies; it's better suited to improvising over a repeating background.
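A quick sanity check on that ceiling: if every one of the 100 patterns were a full 16-step measure (four beats), a song at 130 bpm would last 400 beats, or a little over three minutes, and shortening some patterns pulls the total down from there. The helper below is a hypothetical sketch of that arithmetic, assuming equal-length patterns and 16th-note steps.

```python
def song_length_seconds(num_patterns, bpm, steps_per_pattern=16, steps_per_beat=4):
    """Playing time, in seconds, of a song made of equal-length patterns.

    Assumes every pattern has the same number of steps and that each step
    is a 16th note, so four steps make one quarter-note beat.
    """
    beats = num_patterns * steps_per_pattern / steps_per_beat
    return beats * 60 / bpm  # 60 seconds per minute, bpm beats per minute


secs = song_length_seconds(100, 130)
print(f"{secs:.0f} seconds")  # prints "185 seconds"
```

With 12-step patterns instead (3/4 time), the same 100 patterns at 130 bpm come to roughly 138 seconds.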

The Effects

The DS-10 comes with some nice effects, including chorus, flanger, and delay. Unfortunately, the effects interface is somewhat confusing, and I often find myself wanting to stack more than one effect on a synth, which it doesn't let me do.

The Interface

The interface is minimal, but not beautiful. Sadly, I think they could have reached a much broader audience had they taken the time to make it more polished and friendly, since the 'game' itself has a pretty shallow learning curve.

The Verdict

I'll be using my DS-10 primarily for its synth capabilities: playing with the patcher and, probably more than anything else, using the Kaoss touch pad. While the patterns and song sequencer are helpful, I don't see them handling more complex compositions.

That being said, this thing is pretty much a techno machine-beast. Rawk.